Projects

Joint Optimization for 4D Human-Scene Reconstruction in the Wild

Zhizheng Liu, Joe Lin, Wayne Wu, Bolei Zhou

Preprint (arXiv), 2025

[Paper] [Project Page] [Code (coming soon!)]

Learning to Generate Diverse Pedestrian Movements from Web Videos with Noisy Labels

Zhizheng Liu, Joe Lin, Wayne Wu, Bolei Zhou

International Conference on Learning Representations (ICLR), 2025

[Paper] [Project Page] [Code]

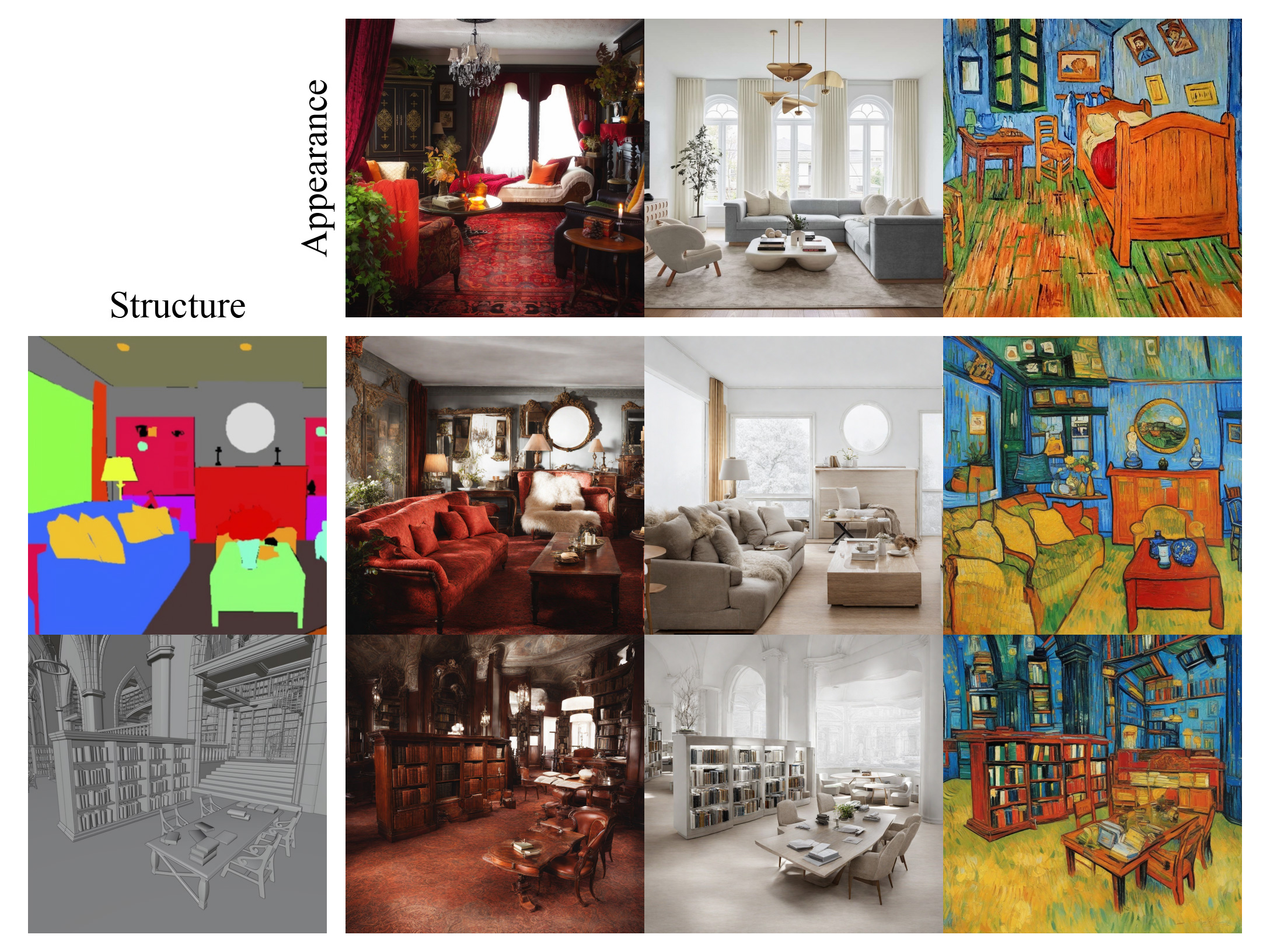

Ctrl-X: Controlling Structure and Appearance for Text-To-Image Generation Without Guidance

Kuan Heng Lin*, Sicheng Mo*, Ben Klingher, Fangzhou Mu, Bolei Zhou

Neural Information Processing Systems (NeurIPS), 2024

[Paper] [Project Page] [Code]

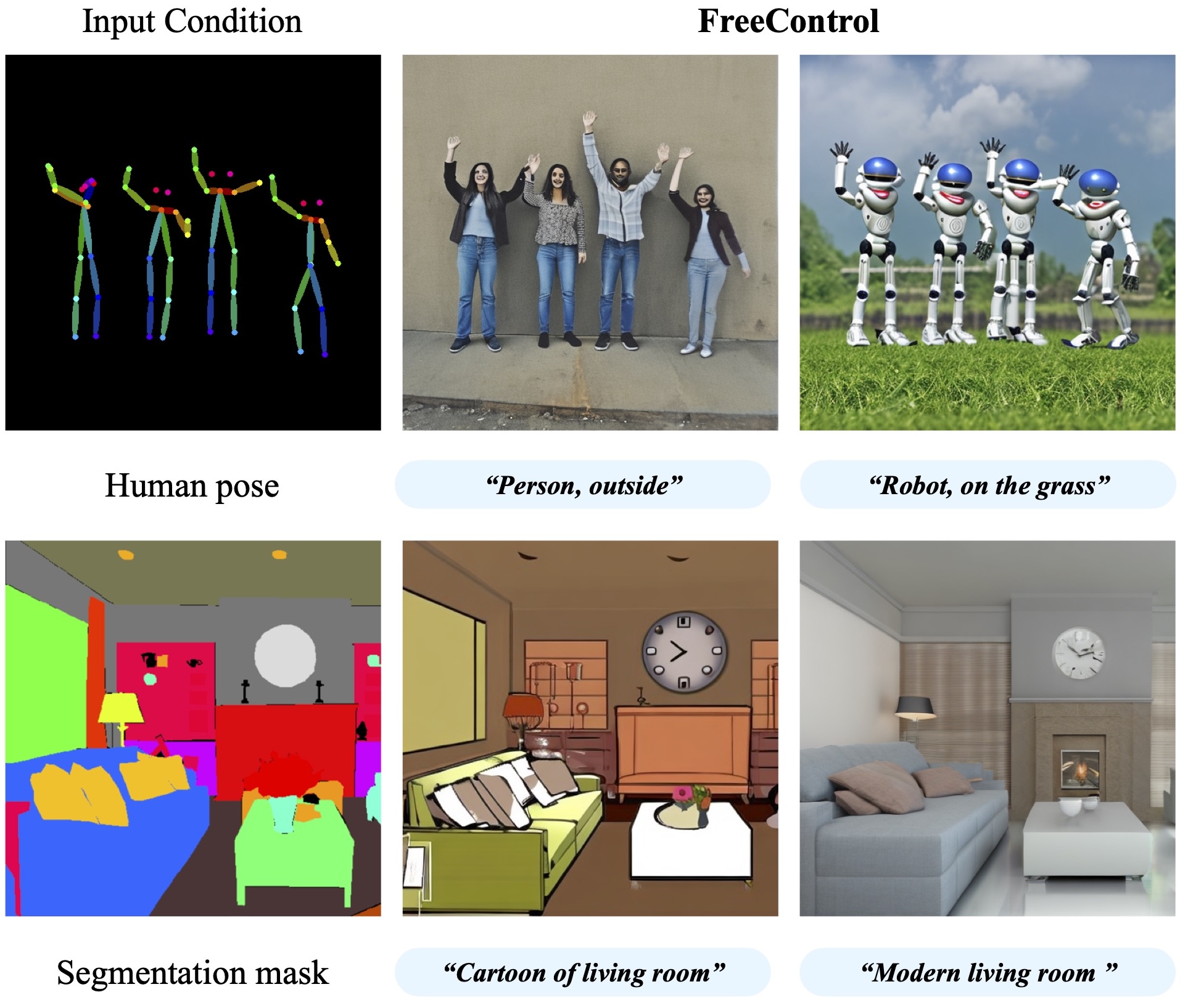

FreeControl: Training-Free Spatial Control of Any Text-to-Image Diffusion Model with Any Condition

Sicheng Mo*, Fangzhou Mu*, Kuan Heng Lin, Yanli Liu, Bochen Guan, Yin Li, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2024

[Paper] [Project Page] [Code]

Previous Projects

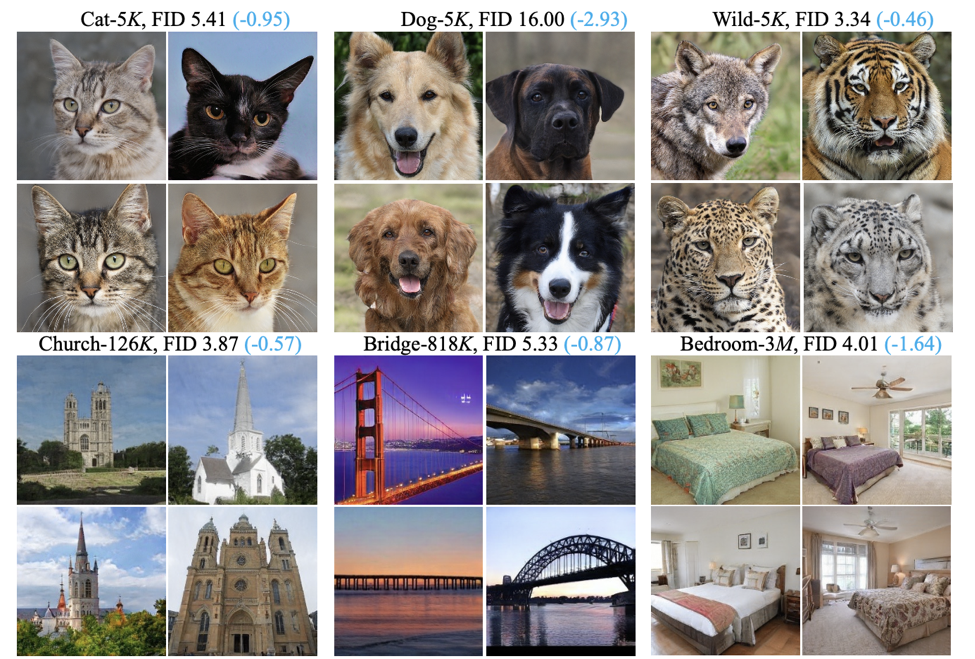

Improving GANs with A Dynamic Discriminator

Ceyuan Yang*, Yujun Shen*, Yinghao Xu, Deli Zhao, Bo Dai, Bolei Zhou

Neural Information Processing Systems (NeurIPS), 2022

[Paper] [Project Page] [Code]

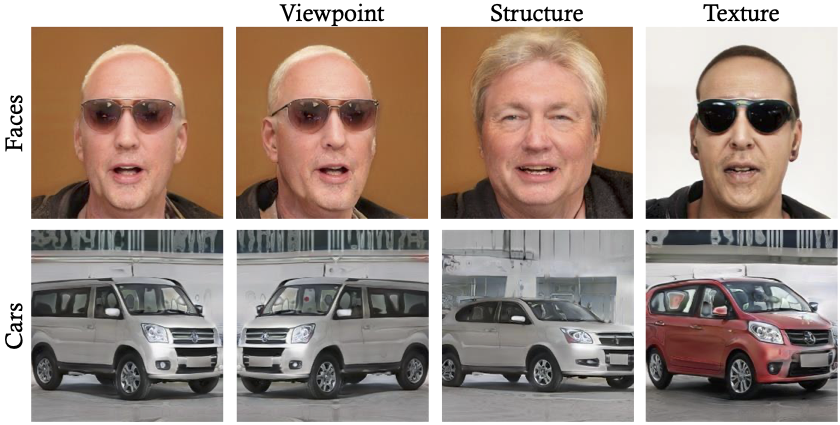

3D-aware Image Synthesis via Learning Structural and Textural Representations

Yinghao Xu, Sida Peng, Ceyuan Yang, Yujun Shen, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2022

[Paper] [Project Page] [Code] [Demo]

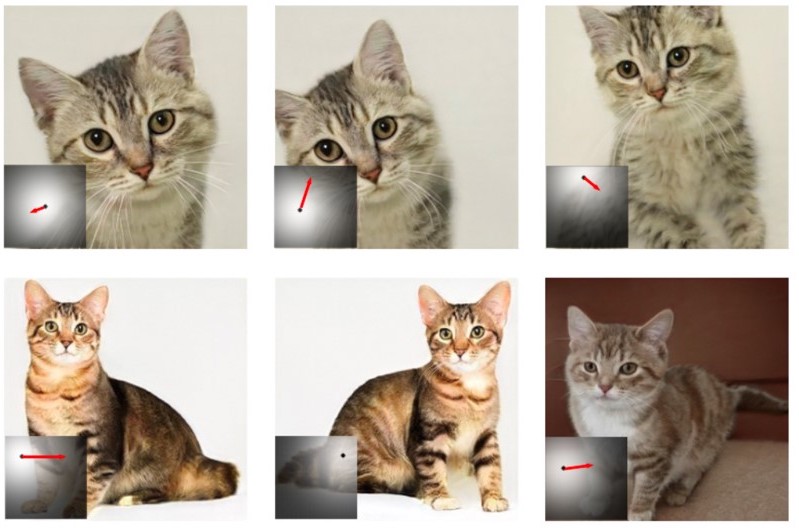

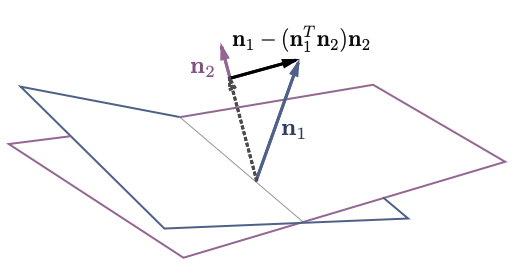

Improving GAN Equilibrium by Raising Spatial Awareness

Jianyuan Wang, Ceyuan Yang, Yinghao Xu, Yujun Shen, Hongdong Li, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2022

[Paper] [Project Page] [Code] [Demo]

One-Shot Generative Domain Adaptation

Ceyuan Yang*, Yujun Shen*, Zhiyi Zhang, Yinghao Xu, Jiapeng Zhu, Zhirong Wu, Bolei Zhou

International Conference on Computer Vision (ICCV), 2023

[Paper] [Project Page] [Code]

Data-Efficient Instance Generation from Instance Discrimination

Ceyuan Yang, Yujun Shen, Yinghao Xu, Bolei Zhou

Neural Information Processing Systems (NeurIPS), 2021

[Paper] [Project Page] [Code]

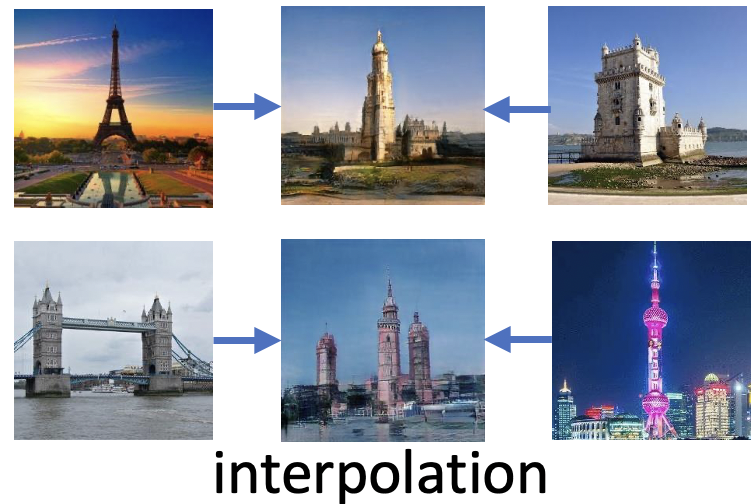

Unsupervised Image Transformation Learning via Generative Adversarial Networks

Kaiwen Zha, Yujun Shen, Bolei Zhou

arXiv preprint arXiv:2103.07751

[Paper] [Project Page] [Code] [Demo]

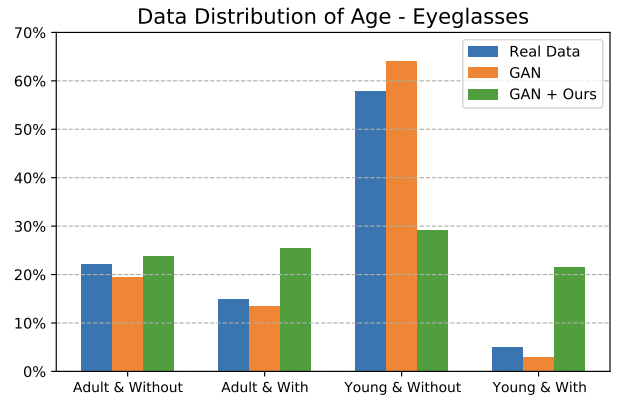

Improving the Fairness of Deep Generative Models without Retraining

Shuhan Tan, Yujun Shen, Bolei Zhou

arXiv preprint arXiv:2012.04842

[Paper] [Project Page] [Code] [Colab]

Generative Hierarchical Features from Synthesizing Images

Yinghao Xu*, Yujun Shen*, Jiapeng Zhu, Ceyuan Yang, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2021 (Oral)

[Paper] [Project Page] [Code]

Closed-Form Factorization of Latent Semantics in GANs

Yujun Shen, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2021 (Oral)

[Paper] [Project Page] [Code] [Demo]

InterFaceGAN: Interpreting the Disentangled Face Representation Learned by GANs

Yujun Shen, Ceyuan Yang, Xiaoou Tang, Bolei Zhou

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), Oct 2020

[Paper] [Project Page] [Code] [Demo]

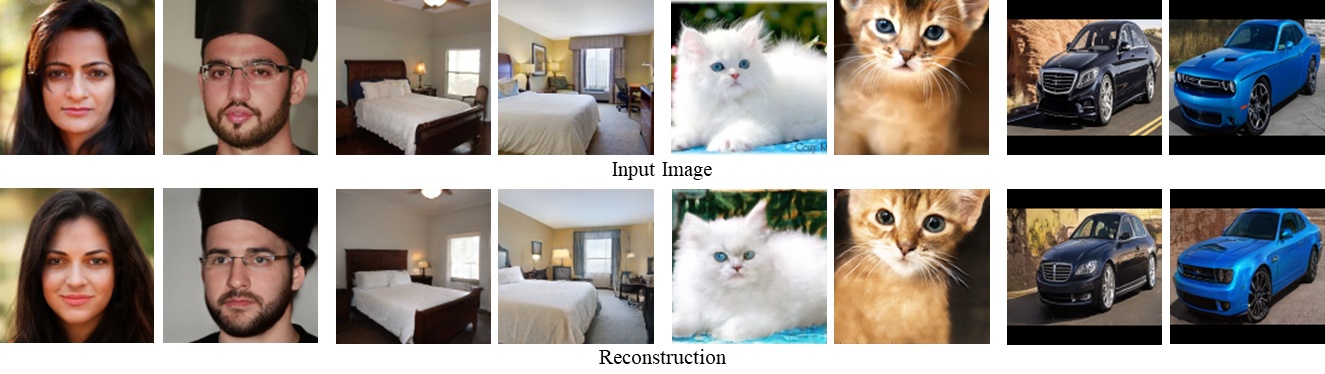

In-Domain GAN Inversion for Real Image Editing

Jiapeng Zhu*, Yujun Shen*, Deli Zhao, Bolei Zhou

European Conference on Computer Vision (ECCV), 2020

[Paper] [Project Page] [Code] [Demo] [Colab]

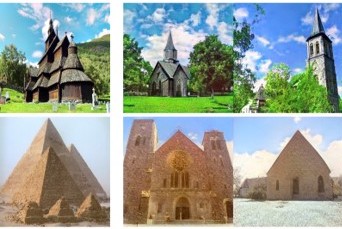

Semantic Hierarchy Emerges in Deep Generative Representations for Scene Synthesis

Ceyuan Yang*, Yujun Shen*, Bolei Zhou

International Journal of Computer Vision (IJCV), 31 Dec 2020

[Paper] [Project Page] [Code] [Demo]

Image Processing Using Multi-Code GAN Prior

Jinjin Gu, Yujun Shen, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2020

[Paper] [Project Page] [Code]

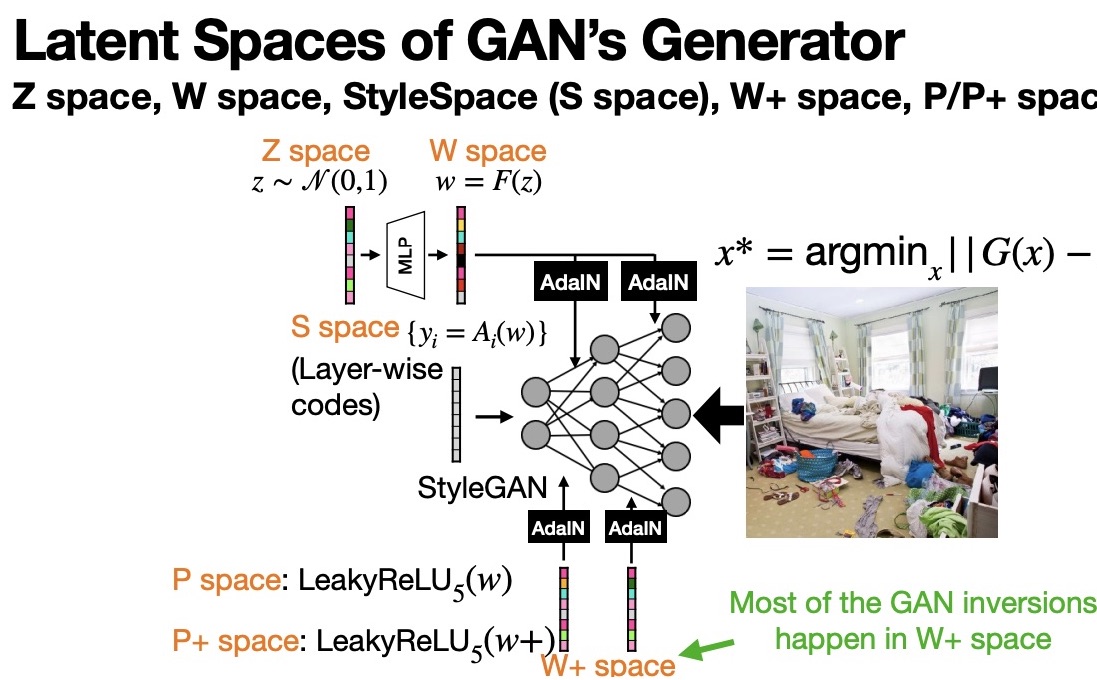

Interpreting the Latent Space of GANs for Semantic Face Editing

Yujun Shen, Jinjin Gu, Xiaoou Tang, Bolei Zhou

Computer Vision and Pattern Recognition (CVPR), 2020

[Paper] [Project Page] [Code] [Demo]

Tutorials

Interpreting Deep Generative Models for Interactive AI Content Creation

Bolei Zhou

Interpretable Machine Learning for Computer Vision (CVPR'21 Tutorial)

[Video (YouTube)] [Video (bilibili)] [Slides]

Exploring and Exploiting Interpretable Semantics in GANs

Bolei Zhou

Interpretable Machine Learning for Computer Vision (CVPR'20 Tutorial)

[Video (YouTube)] [Video (bilibili)] [Slides]

Interpreting and Exploiting the Latent Space of GANs

Yujun Shen

Invited talk by 机器之心 (Synced)

[Video (in Chinese)]

Team

Principal Investigator

Bolei Zhou

Previous Team Members

Yujun Shen

Ceyuan Yang

Jiapeng Zhu

Yinghao Xu

Shuhan Tan