1 CUHK, 2 Ant Group, 3 Shanghai AI Laboratory, 4 UCLA


@article{yang2022improving,

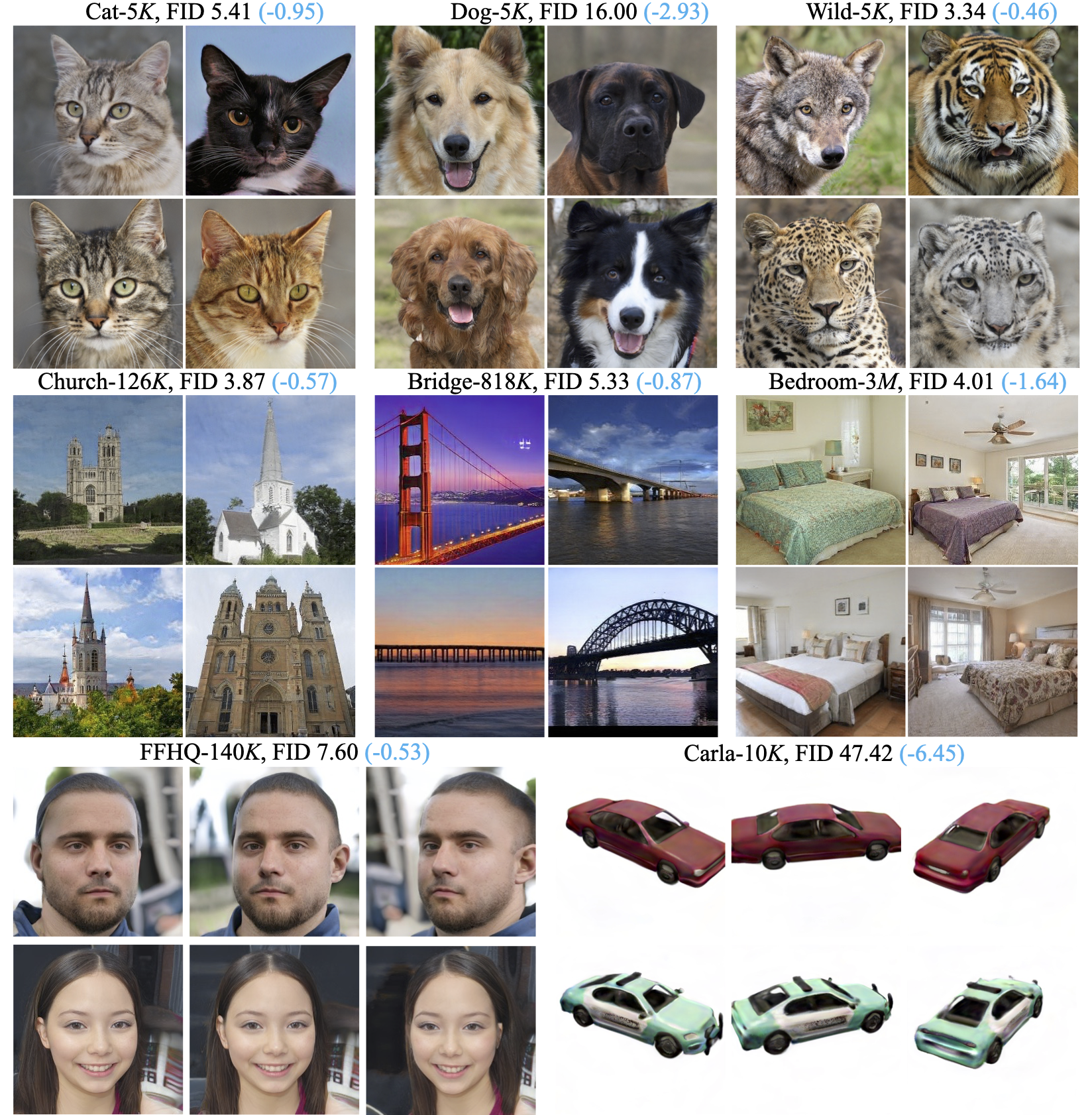

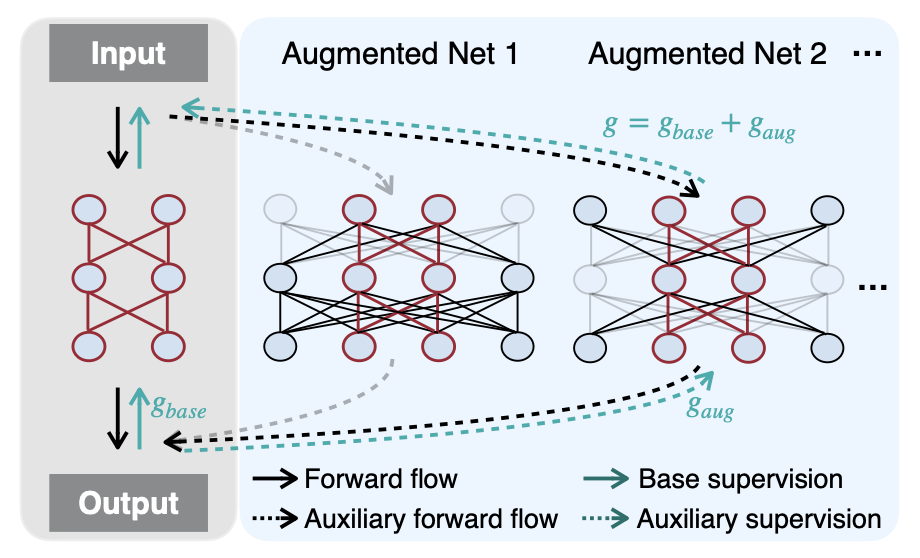

title = {Improving GANs with A Dynamic Discriminator},

author = {Yang, Ceyuan and Shen, Yujun and Xu, Yinghao and Zhao, Deli and Dai, Bo and Zhou, Bolei},

article = {arXiv preprint arXiv:2209.09897},

year = {2022}

}

Comment: Introducing network augmentation for improving the performance of tiny neural networks.