1 Sun Yat-sen University

2 The Chinese University of Hong Kong

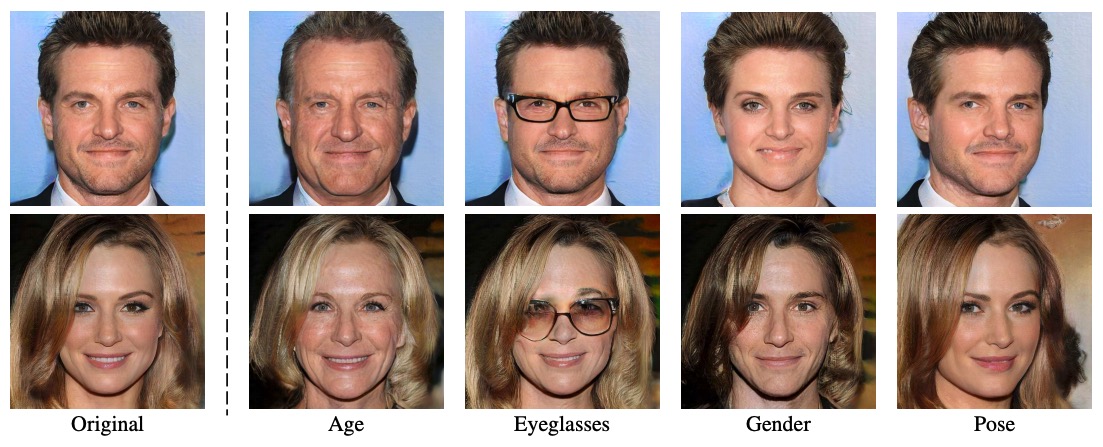

Fair Image Generation

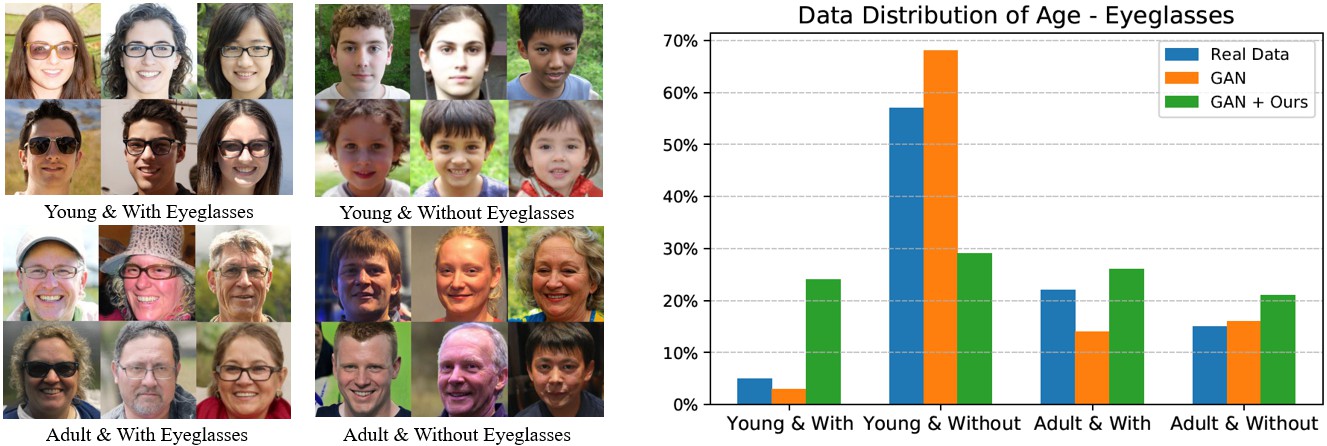

Age - Eyeglasses

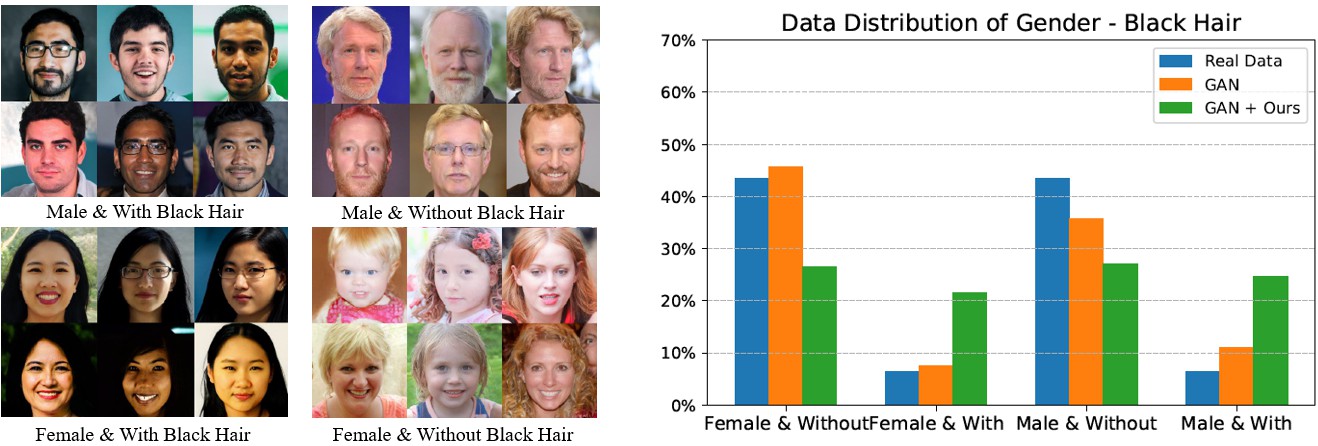

Gender - Black Hair

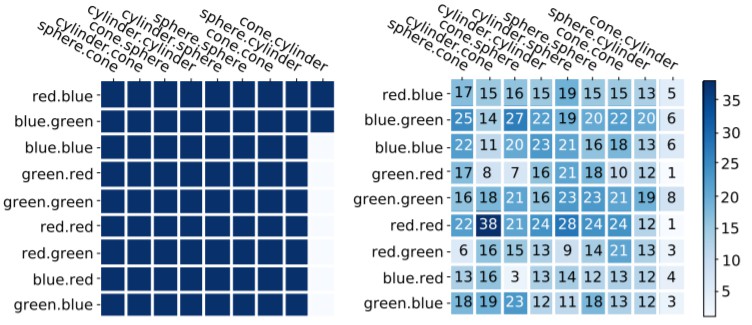

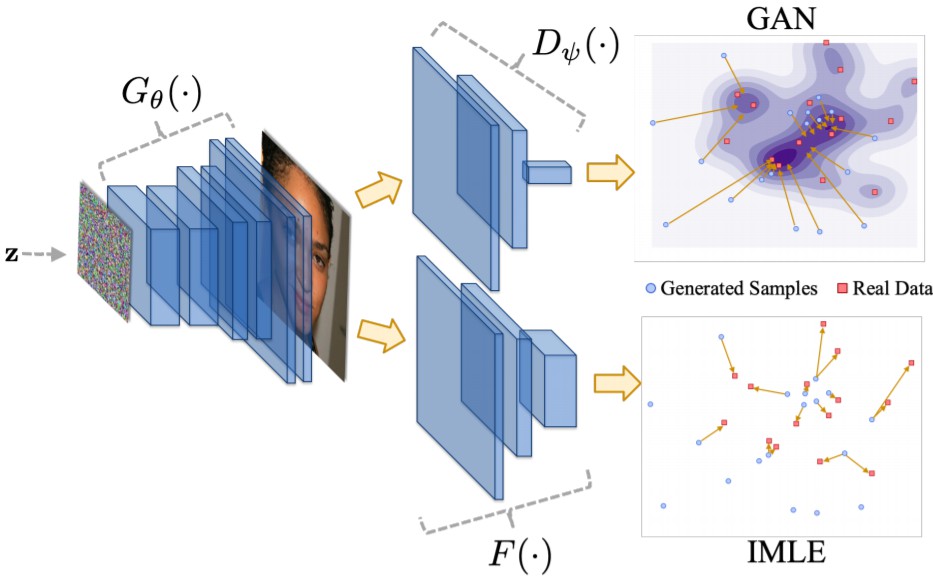

Identifying Bias in Existing Models

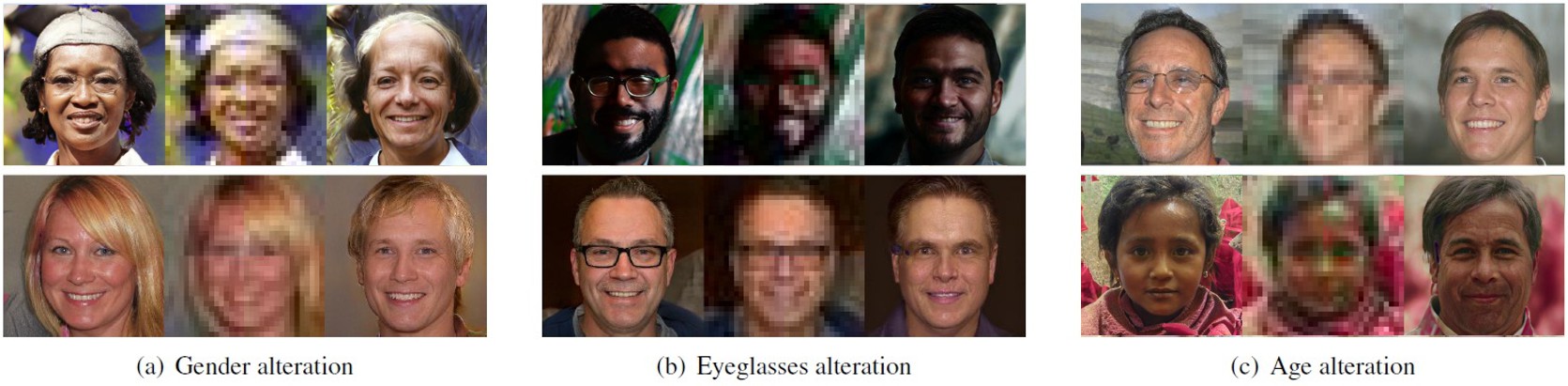

Mis-classified Images by Commercial APIs

Attribute Alteration by a Face Super-resolution Model

@article{tan2020fairgen,
  title   = {Improving the Fairness of Deep Generative Models without Retraining},
  author  = {Tan, Shuhan and Shen, Yujun and Zhou, Bolei},
  journal = {arXiv preprint arXiv:2012.04842},
  year    = {2020}
}

Comment: Proposes a method for improving the fairness of pretrained deep generative models without retraining them.