1 The Chinese University of Hong Kong

2 ByteDance Inc.

3 Hong Kong University of Science and Technology

4 Microsoft Research Asia

5 University of Southern California

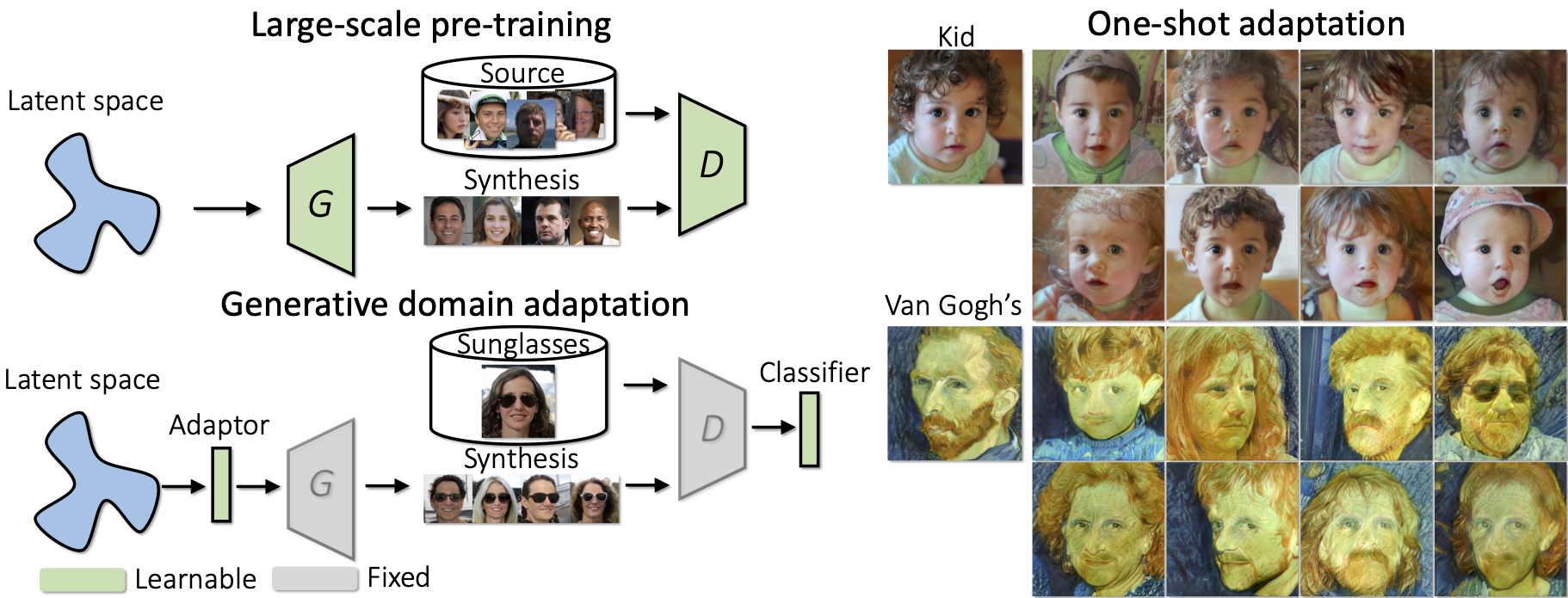

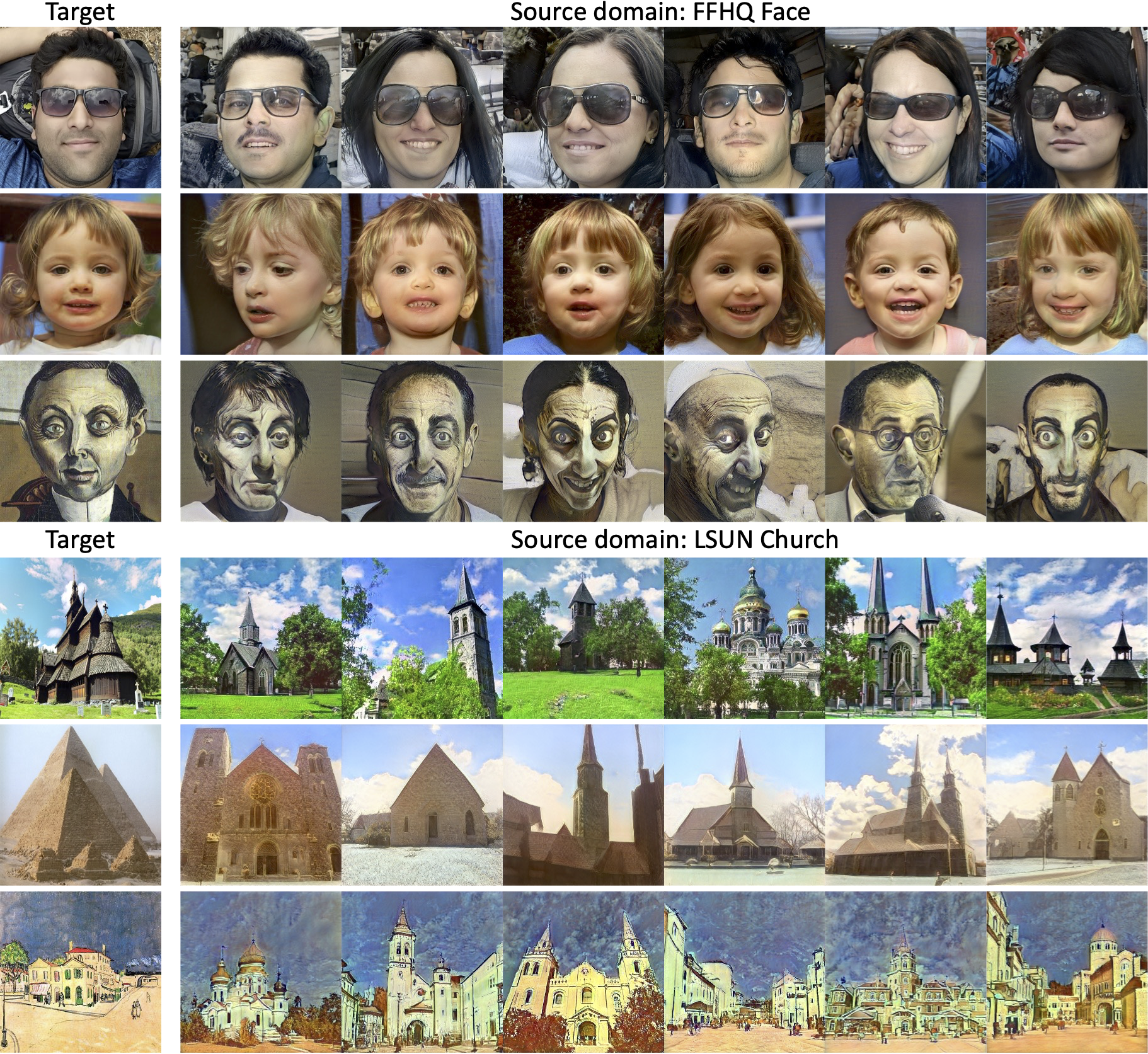

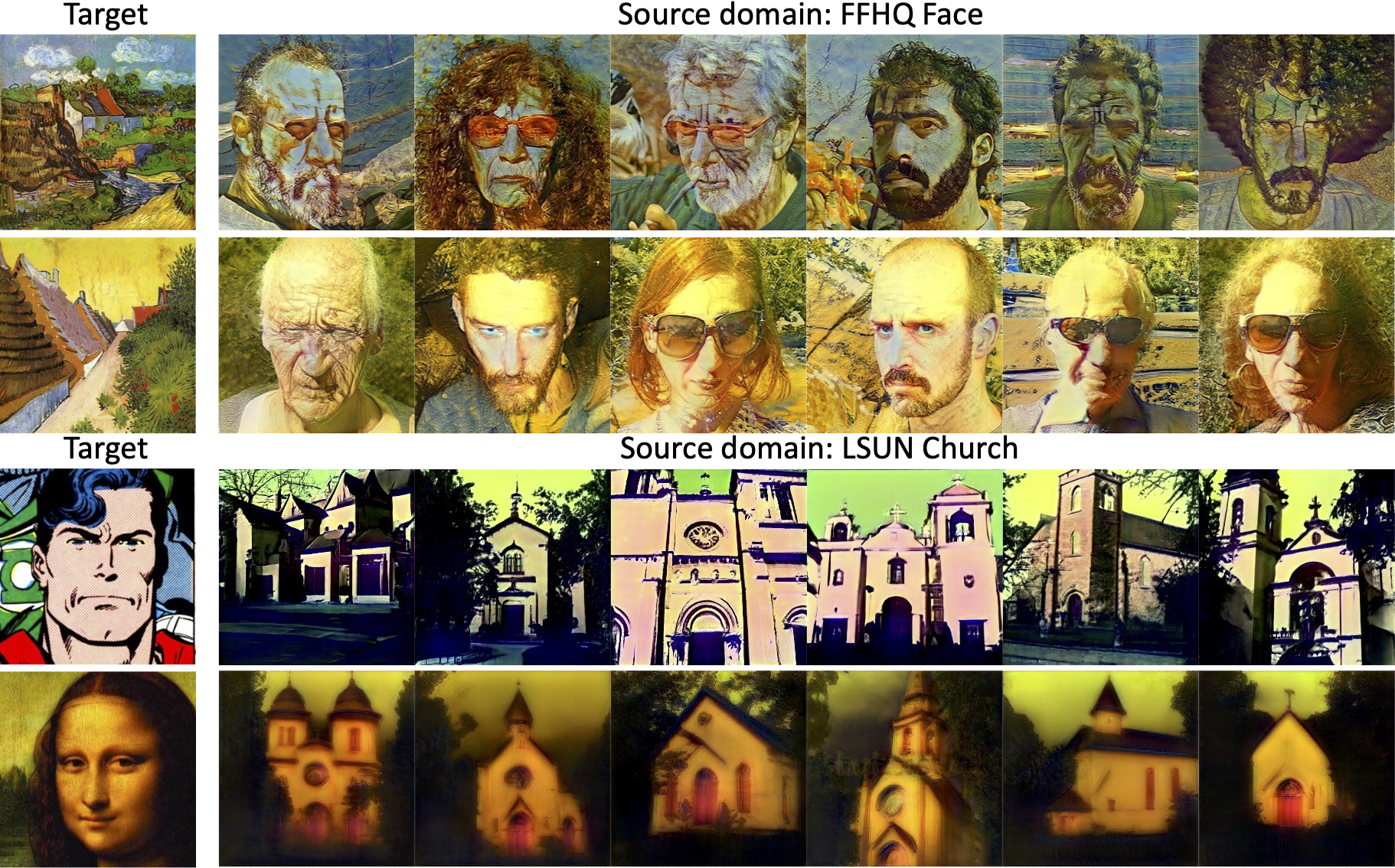

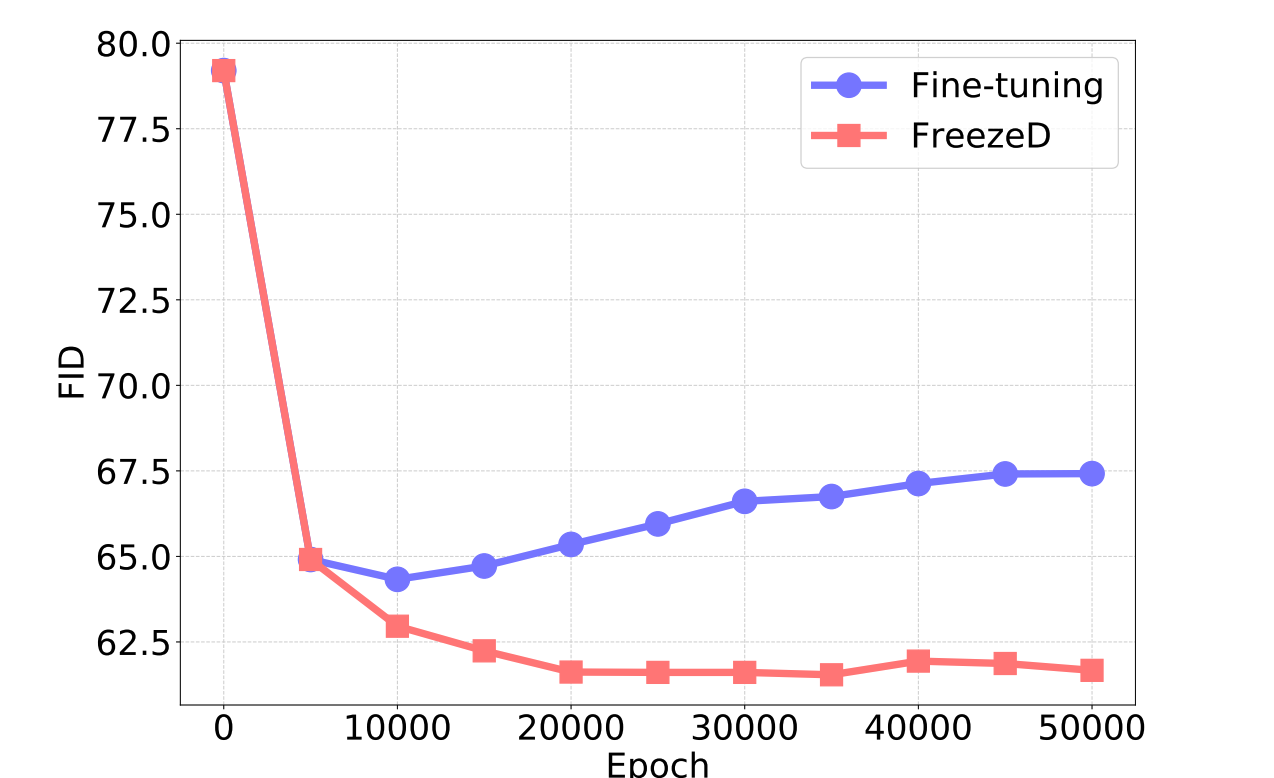

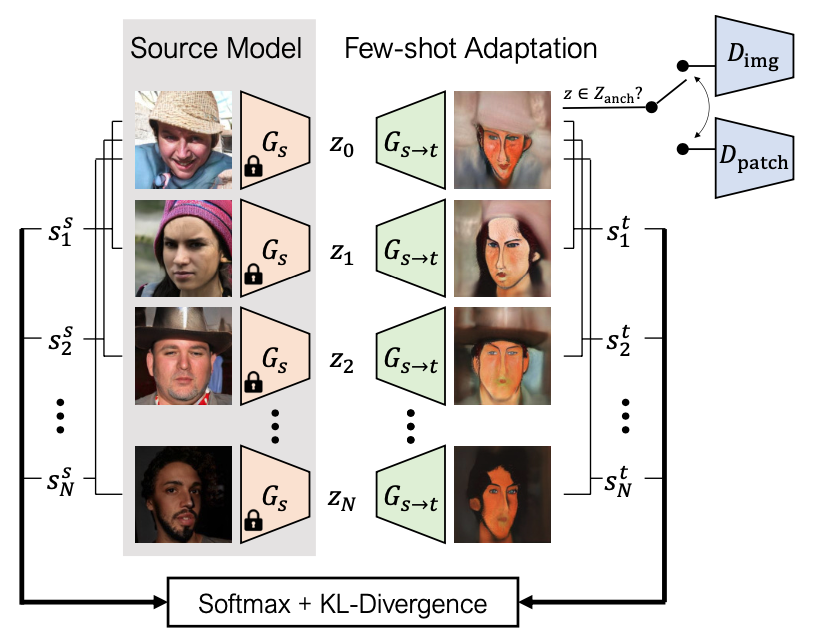

Comment: Proposes to freeze the lower layers of the discriminator for generative domain adaptation.
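
The idea of freezing the lower discriminator layers can be illustrated with a small framework-free sketch. Everything here is hypothetical and chosen only for illustration: the `Layer` class, the layer names, the number of frozen layers, and the toy gradient values are assumptions, not details from the source. In a real deep learning framework the same effect would typically be achieved by disabling gradient updates for the parameters of the lower layers.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """One discriminator layer: a list of weights and a trainable flag."""
    name: str
    weights: list
    trainable: bool = True


def freeze_lower_layers(layers, num_frozen):
    """Mark the first `num_frozen` layers (closest to the input) as frozen."""
    for index, layer in enumerate(layers):
        layer.trainable = index >= num_frozen
    return layers


def sgd_step(layers, grads, lr=0.1):
    """Apply one gradient step, skipping every frozen layer."""
    for layer in layers:
        if not layer.trainable:
            continue
        layer.weights = [w - lr * g for w, g in zip(layer.weights, grads[layer.name])]


# Hypothetical three-layer discriminator, ordered from input to output.
discriminator = [
    Layer("conv1", [1.0, 1.0]),
    Layer("conv2", [1.0, 1.0]),
    Layer("head", [1.0, 1.0]),
]

# Assumption: freeze the two lowest layers and adapt only the top one.
freeze_lower_layers(discriminator, num_frozen=2)

# Toy gradients standing in for the adaptation-stage discriminator loss.
grads = {layer.name: [0.5, 0.5] for layer in discriminator}
sgd_step(discriminator, grads)

for layer in discriminator:
    print(layer.name, layer.trainable, layer.weights)
```

After the update step, only the weights of the unfrozen top layer change; the frozen lower layers keep the values they had before adaptation.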