1 The Chinese University of Hong Kong

2 Zhejiang University

3 ByteDance Inc.

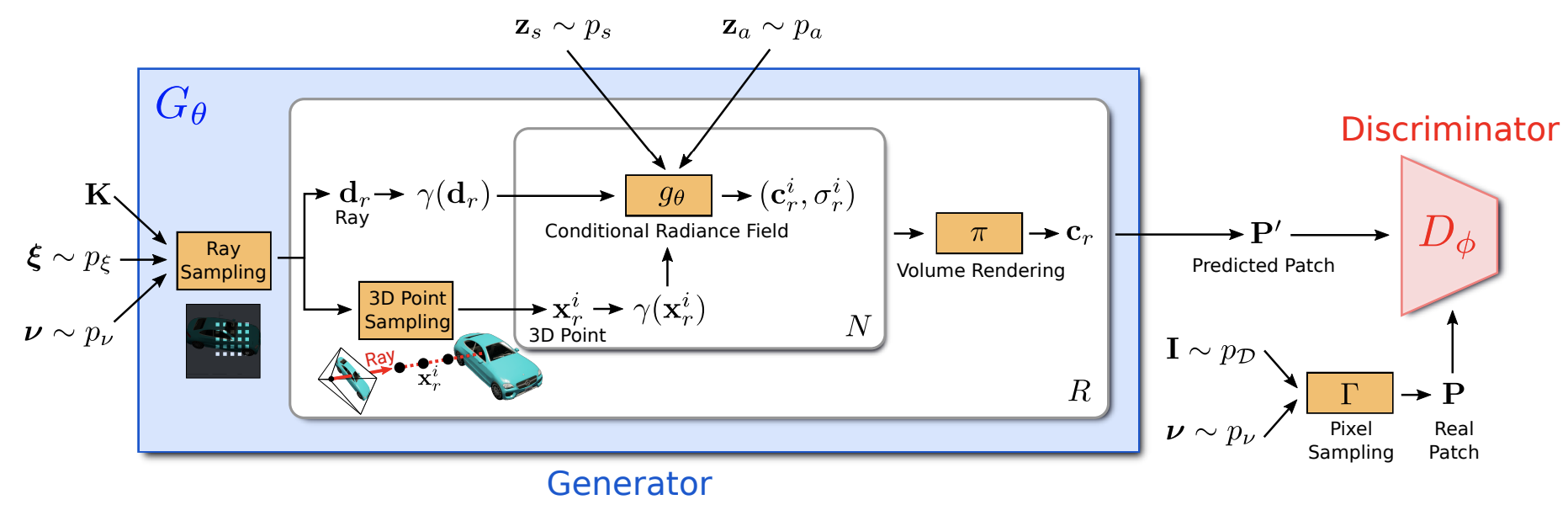

@article{xu2021volumegan,

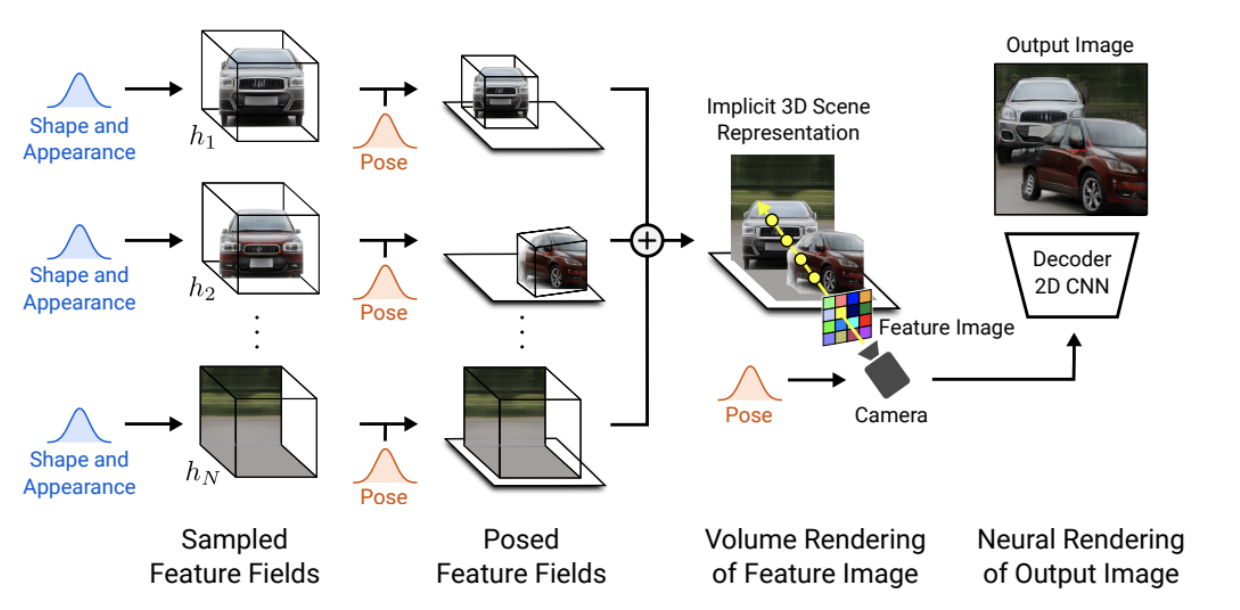

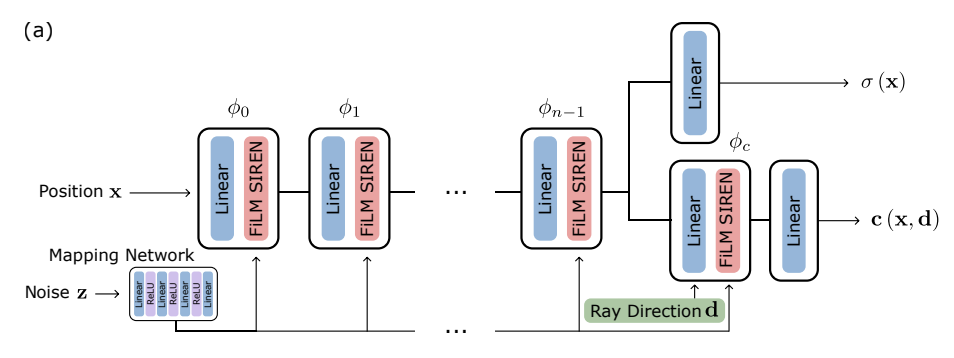

title = {3D-aware Image Synthesis via Learning Structural and Textural Representations},

author = {Xu, Yinghao and Peng, Sida and Yang, Ceyuan and Shen, Yujun and Zhou, Bolei},

article = {arXiv preprint arXiv:2112.10759},

year = {2021}

}

Comment: Proposes voxelized and implicit 3D representations, then renders them to 2D image space with a reshape operation.
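
The comment does not say what the reshape does. One plausible reading, shown here purely as an illustrative sketch, is that the depth axis of a voxel feature volume is folded into the channel axis, turning a (C, D, H, W) volume into a (C*D, H, W) 2D feature map. The function name `collapse_depth`, the toy sizes, and the nested-list layout are all assumptions made for this example, not details from the cited work.

```python
def collapse_depth(volume):
    """Fold the depth axis of a (C, D, H, W) nested-list volume into channels.

    Returns a (C*D, H, W) nested list. Values are only regrouped, never
    changed, which matches a row-major reshape of the first two axes.
    """
    return [depth_slice for channel in volume for depth_slice in channel]


# Hypothetical toy feature volume: C=2 channels, D=3 depth slices, H=W=2.
# Each value encodes its own (c, d, h, w) position so the regrouping is visible.
C, D, H, W = 2, 3, 2, 2
volume = [[[[c * 100 + d * 10 + h * W + w for w in range(W)]
            for h in range(H)]
           for d in range(D)]
          for c in range(C)]

feature_map = collapse_depth(volume)

# The result has C*D channels of size H x W; channel c*D + d is volume[c][d].
print(len(feature_map), len(feature_map[0]), len(feature_map[0][0]))
```

Under this reading the reshape itself carries no learnable parameters; any mixing of depth information would have to come from layers applied to the resulting 2D feature map.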